Docker as a tool for end-to-end testing in a microservice ecosystem

Introduction to the Four-Layer Model of the Microservice Ecosystem

In her book Production-Ready Microservices, Susan Fowler introduces a four-layer model of the microservice ecosystem. The model consists of hardware, communication, application platform, and microservices (layers 1 to 4). The hardware layer is responsible for the physical or cloud servers, databases, operating system, resource isolation and abstraction, configuration management, and host-level monitoring and logging. This is where our microservices live.

| Layer |
|---|
| Layer 4: Microservices |
| Layer 3: Application Platform |
| Layer 2: Communication |
| Layer 1: Hardware |

The communication layer is responsible for how our microservices communicate with the outside world. It includes, but is not limited to, the network, DNS, remote procedure calls (REST vs. gRPC), endpoints, messaging, service discovery, service registry, and load balancing. This layer matters because a good-quality communication layer keeps our application connected and accessible to and from the outside world.

The application platform layer is responsible for self-service internal development tools; the development environment; test, package, build, and release tools; the deployment pipeline; microservice-level logging; and microservice-level monitoring. This is where developers build our application, and a good application platform helps them be more productive. Last but not least, the microservice layer is where the microservices themselves live and where we manage microservice-specific configuration.

Application Platform Layer and Its Importance

No model is perfect, but some are useful, and the four-layer model is no exception. From this model we can see that the application platform layer is one of the most important layers, because it is where our services are developed. This layer determines how productive our developers are and how easily they get things done. At one of my clients (which I can’t disclose), we put a lot of effort into automation and self-service tools that make it easy for developers to develop, build, and ship software. One aspect of this is end-to-end (e2e) testing, which can be tricky in a microservice ecosystem. In an integration test, we usually test our application against mocked dependency services to see whether it can integrate with the ecosystem. In an e2e test, we integrate our application with the whole “real” ecosystem, meaning real service dependencies rather than mocked ones. This means we need to run our service and all of its dependencies on a single machine whenever we want to test.

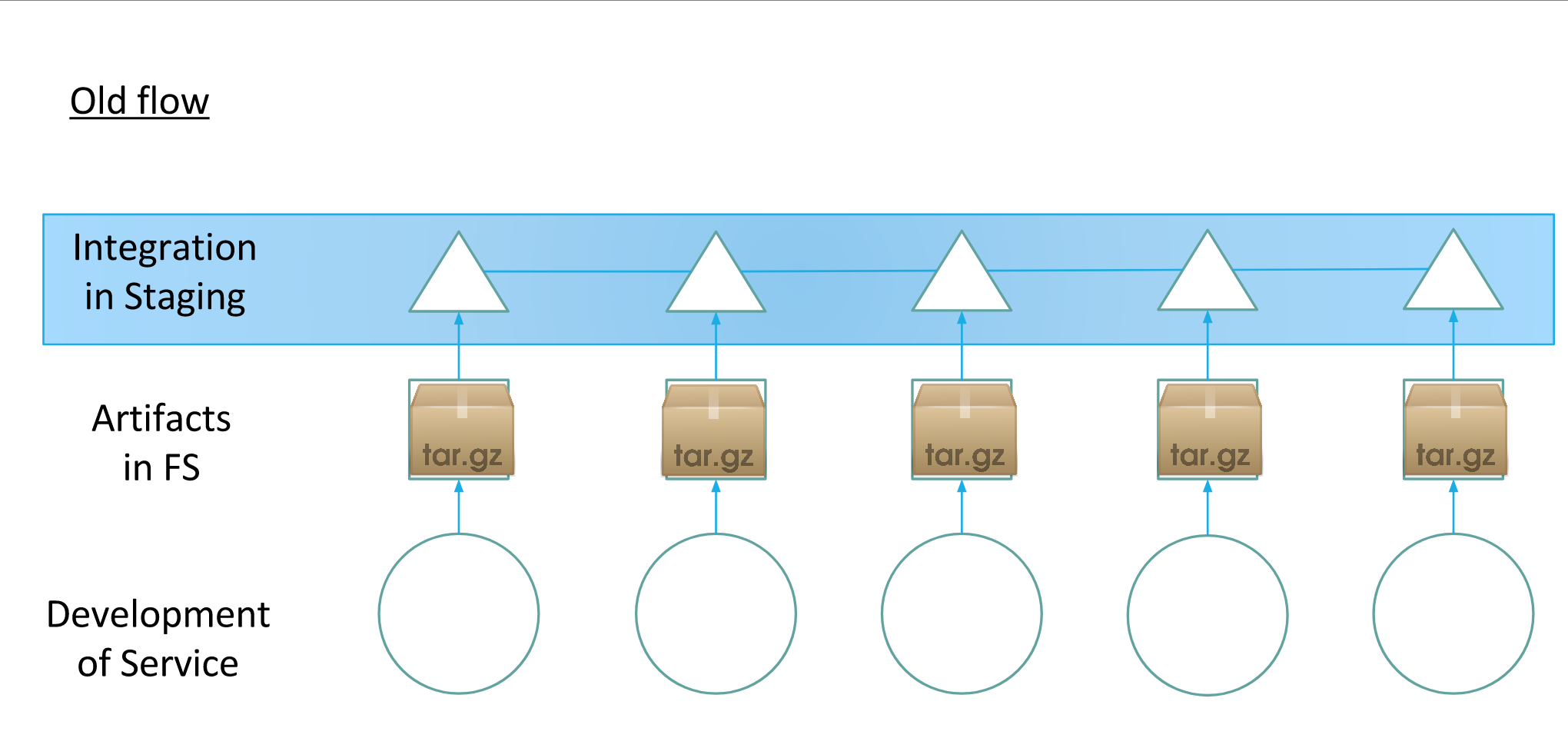

Old Development Pipeline Flow

To give more context, we previously used VMs as the default resource abstraction, but as container technology matures and gains adoption, we are migrating all our services to containerized applications. Figure 1 shows the old development flow, which has three stages. Stage one is service development: we develop our software, and whenever we commit and push to the remote upstream repository, a CI job builds, tests, and pushes the artifact to a file server. In stage one, testing is limited to unit tests and integration tests. In stage two, the artifact for a specific commit and branch is stored on the file server. From there we move to stage three, deployment to staging, by running a self-service deployment through the Rundeck automation tool. Deployment to production is out of scope here; everything described is still in staging.

After the artifact is deployed to staging, whenever a deployment is scheduled, the person in charge (PIC) of the deployment needs to trigger a semi-automated e2e test in staging. Running e2e tests in staging has some caveats. Staging is wild: people from other teams may do something “creative” or even evil with the staging database, configuration, and so on, which makes our semi-automated tests flaky. The first problem is that this can take 2 hours to 1 day of our developers’ precious time. The second problem is that, because of the flaky nature of running e2e tests in staging, we sometimes get false positives and false negatives, and restoring staging to its initial state takes a long time. After months of painful deployment cycles, we decided to employ Docker as part of our CI pipeline to reduce deployment time.

Figure 1. Old flow of our microservice development.

For those who are not yet familiar with container technology and Docker, it is basically a way to package our application in an isolated environment without thinking about hardware such as CPU and RAM. It is not hardware virtualization like a VM: when we run a container, it shares the host’s kernel and many host resources. Though containers are not completely isolated, Docker isolates each container’s network and volumes, so we can manage disk and network for each container running on a single machine. One benefit of containers is that they help us spin up our microservices in virtually any environment in a very short time. This fits our need for e2e testing. We can orchestrate a collection of services from our microservice ecosystem with a container orchestration tool such as docker-compose or Kubernetes, run an automated e2e test there, shut the environment down, and spin it up again when we need it (with the right configuration and routing, of course). This way we can easily test any version of our app against any version of its dependencies.
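The spin-up, test, and tear-down cycle described above can be sketched as a small Python wrapper around docker-compose. This is a minimal sketch, not the pipeline we actually run: the compose file name `docker-compose.e2e.yml` and the test service name `e2e-tests` are hypothetical, and the sketch assumes the e2e test suite itself runs as one of the services in the compose file.

```python
import subprocess
from typing import Callable, List

# Hypothetical compose file that lists the service under test, its real
# dependencies, and a container that runs the e2e test suite.
COMPOSE_FILE = "docker-compose.e2e.yml"


def compose_cmd(*args: str, compose_file: str = COMPOSE_FILE) -> List[str]:
    """Build a docker-compose command line for the given compose file."""
    return ["docker-compose", "-f", compose_file, *args]


def run_e2e(test_service: str = "e2e-tests",
            runner: Callable = subprocess.run) -> int:
    """Spin up the ecosystem, run the tests, and always tear everything down.

    Returns the exit code of the test container (0 means the tests passed).
    """
    try:
        # --exit-code-from stops all containers once the test service exits
        # and makes `up` return that service's exit code.
        result = runner(compose_cmd("up", "--exit-code-from", test_service))
        return result.returncode
    finally:
        # Remove containers, networks, and volumes so the next run starts clean.
        runner(compose_cmd("down", "--volumes"))
```

A CI job could call `run_e2e()` and fail the build on a non-zero return value. Tearing down in `finally` is what keeps each run independent of the previous one, which is the property the shared staging environment lacked.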

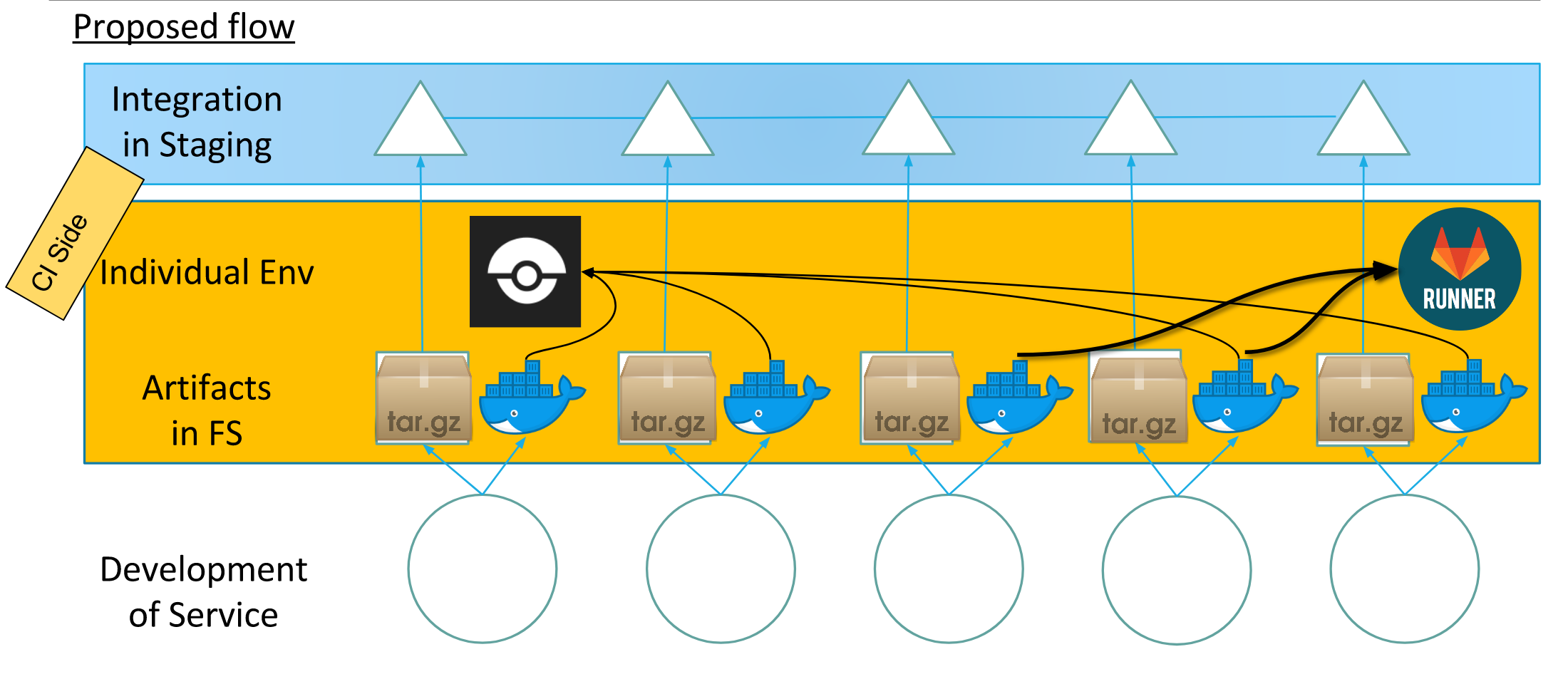

Proposed New Development Pipeline Flow

After some research and thought, we built the first iteration of an automated e2e test integrated into the CI of our core service (one of the largest services at my client). The difference in this proposed flow is that, in the CI pipeline, every project packages its application as a Docker container image and pushes it to the container registry. Any project can then pull those images and combine many different services in a single orchestration file (in our case, a docker-compose file). The convention that every project has a Docker image, even if it is not yet used in production, is what makes this possible. This approach takes time, because we need to ask every team to add a Docker image build to their pipeline. However, since my client is already adopting containers as its main packaging system and Kubernetes as its orchestration and cluster management tool, this did not take long. You can also limit the scope to the services in one domain first, then move on to the services in other domains. We have several service domains: domain-specific business logic, email and SMS notification services, client dashboard services, and so on.
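To illustrate how images from the registry can be combined into one orchestration file, here is a minimal Python sketch that builds the `services` section of a compose file from a map of pinned versions. The registry host, service names, and tags are hypothetical, and a real compose file would also carry ports, environment, and dependency settings.

```python
from typing import Dict

# Hypothetical registry host; substitute your own container registry.
REGISTRY = "registry.example.com"


def build_compose_spec(versions: Dict[str, str],
                       registry: str = REGISTRY) -> dict:
    """Return a compose-style mapping with one service per pinned image.

    `versions` maps a service name to the image tag that should be tested.
    """
    services = {
        name: {"image": f"{registry}/{name}:{tag}"}
        for name, tag in sorted(versions.items())
    }
    return {"services": services}
```

For example, `build_compose_spec({"core-service": "1.4.2", "notification-service": "2.0.0"})` pins two services to specific tags. Changing a single tag in the map is enough to test one version of a service against a different version of a dependency.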

One advantage of this approach is that we can spin up our test environment regardless of the CI pipeline tool. At my client we use various build pipeline tools: some teams use GitLab CI, others use Drone CI, and some use CircleCI. Whatever the preferred tool, this approach remains applicable. Another advantage is that we can easily spot the error when a developer forgets to add a new configuration value, because the service will not start or will not behave properly without it. This pushes developers to diligently update our configuration management service every time a feature that needs new configuration is merged into our development branch (we adopt GitFlow).
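The fail-fast behavior on missing configuration can be sketched as a startup check like the one below. This is an illustration under assumptions, not our services' actual code: the key names are hypothetical, and each service would declare its own required keys.

```python
from typing import Iterable, List, Mapping

# Hypothetical keys that a service needs before it can start.
REQUIRED_KEYS = ("DATABASE_URL", "NOTIFICATION_SERVICE_URL")


def missing_config(config: Mapping[str, str],
                   required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """Return the required keys that are absent or empty in `config`."""
    return [key for key in required if not config.get(key)]


def require_config(config: Mapping[str, str],
                   required: Iterable[str] = REQUIRED_KEYS) -> None:
    """Raise at startup so the container exits instead of misbehaving later."""
    missing = missing_config(config, required)
    if missing:
        raise RuntimeError("missing configuration: " + ", ".join(missing))
```

Calling `require_config(os.environ)` at startup (after `import os`) makes a forgotten key stop the container immediately, so the e2e run fails with a clear message rather than with an unrelated test error.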

Figure 2. Proposed flow of our microservice development.

Result

After implementing the new development flow, with e2e tests running automatically in our CI pipeline, we achieved a significant improvement. We brought our long e2e suite, with hundreds of tests, down to only 25-40 minutes, a big achievement compared with the 2 hours to 1 day of developer time it took before. At my client we believe that developer time is precious, and that every wasted minute we remove has a great impact on developer happiness.

In addition to the automated e2e test, we also added automated deployment after the e2e test finishes. The result was significant: besides cutting the time developers spend on e2e testing, we also improved our deployment cycle. With this model in place, there is no longer a “prepare release” step for the developer whose turn it is in the release PIC rotation. Once a feature branch is merged into development, it is automatically tested with unit tests, integration tests, and e2e tests, then automatically deployed to staging. We have also built a culture of pull request ownership: once a Pull Request/Merge Request has passed the CI tests and been reviewed thoroughly, its owner merges it into development. The release PIC then only merges development into master and bumps the version. The next day, they can run the deployment to canary and then to production.
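The gating described above, where staging deployment happens only after every test stage passes, can be sketched as follows. This is a simplified model with hypothetical stage names and stand-in callables; in practice the CI tool itself enforces the ordering.

```python
from typing import Callable, List, Sequence, Tuple

# A stage is a name plus a callable that returns True when the stage succeeds.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: Sequence[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that passed, so a later stage such as
    the staging deployment never runs after a failed test stage.
    """
    passed: List[str] = []
    for name, step in stages:
        if not step():
            break
        passed.append(name)
    return passed
```

With stages ordered as unit tests, integration tests, e2e tests, and deploy to staging, a failing e2e stage yields a result that contains only the first two names, and the deploy step is never called.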

Conclusion

Docker container technology helps us increase our development velocity and developer happiness, and it fits a microservice ecosystem like ours. Though not perfect, this approach seems a good start for development teams with a microservice architecture whose services have many dependencies and who want to e2e test them.